Emre Ugur

Ph.D.

From Continuous Manipulative Exploration to Symbolic Planning

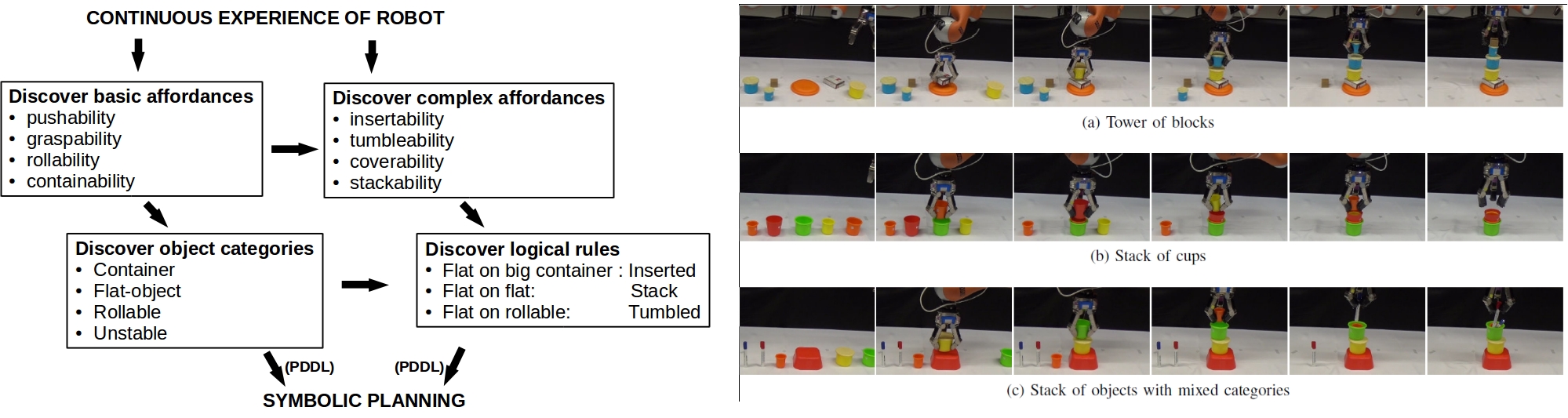

This work aims at the bottom-up and autonomous development of symbolic planning operators from the continuous interaction experience of a manipulator robot that explores the environment using its action repertoire. The symbolic knowledge is developed in two stages. In the first stage, the robot explores the environment by executing actions on single objects, forms effect and object categories, and gains the ability to predict the object/effect categories from the visual properties of the objects by learning the nonlinear and complex relations among them. In the next stage, with further interactions that involve stacking actions on pairs of objects, the system learns logical high-level rules that return a stacking-effect category given the categories of the involved objects and the discrete relations between them. Finally, these categories and rules are encoded in the Planning Domain Definition Language (PDDL), enabling symbolic planning. We realized our method by learning the categories and rules in a physics-based simulator. The learned symbols and operators are verified by generating and executing non-trivial symbolic plans on the real robot in a tower building task. (ICRA2015.pdf)
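To make the final encoding step concrete, the sketch below turns a table of learned stacking rules into a PDDL domain string. It is a minimal illustration under assumptions that are not from the original work: each rule is represented as (below-object category, above-object category, discrete relation) mapped to a stacking-effect category, and the predicate names (`clear`, `on`, `inside`, `on-table`, `category-<name>`) as well as all category and effect labels are hypothetical. The operators actually generated in ICRA2015.pdf may be structured differently.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StackRule:
    """A learned rule: (below category, above category, relation) -> stacking-effect category.

    All labels used with this class are hypothetical placeholders.
    """
    below_category: str
    above_category: str
    relation: str
    effect_category: str


# Hypothetical mapping from effect category to PDDL add/delete effects.
EFFECTS = {
    "stacked": "(on ?above ?below) (not (clear ?below))",
    "inserted": "(inside ?above ?below)",  # the container stays clear (still insertable)
}
DEFAULT_EFFECT = "(on-table ?above)"  # e.g. the placed object tumbled off


def rule_to_pddl(rule: StackRule, index: int) -> str:
    """Encode one rule as a PDDL action whose preconditions are the rule's conditions."""
    effect = EFFECTS.get(rule.effect_category, DEFAULT_EFFECT)
    return (
        f"(:action stack-{index}\n"
        "  :parameters (?below ?above)\n"
        "  :precondition (and (clear ?below) (clear ?above)\n"
        f"                     (category-{rule.below_category} ?below)\n"
        f"                     (category-{rule.above_category} ?above)\n"
        f"                     ({rule.relation} ?above ?below))\n"
        f"  :effect (and {effect}))"
    )


def build_domain(rules: list[StackRule], name: str = "tower") -> str:
    """Assemble a STRIPS domain with predicate declarations and one action per rule."""
    categories = sorted({r.below_category for r in rules} | {r.above_category for r in rules})
    relations = sorted({r.relation for r in rules})
    predicates = ["(clear ?x)", "(on ?x ?y)", "(inside ?x ?y)", "(on-table ?x)"]
    predicates += [f"(category-{c} ?x)" for c in categories]
    predicates += [f"({rel} ?x ?y)" for rel in relations]
    actions = "\n".join(rule_to_pddl(r, i) for i, r in enumerate(rules))
    return (
        f"(define (domain {name})\n"
        "  (:requirements :strips)\n"
        f"  (:predicates {' '.join(predicates)})\n"
        f"{actions})"
    )


if __name__ == "__main__":
    rules = [
        StackRule("box", "box", "smaller", "stacked"),
        StackRule("cup", "box", "smaller", "inserted"),
        StackRule("box", "sphere", "smaller", "tumbled"),
    ]
    print(build_domain(rules))
```

Each learned rule becomes one operator, so an off-the-shelf PDDL planner could search over them for a tower-building goal; grouping several rules into one parameterized operator would be an alternative design.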

Next, the robot progressively updated the previously learned concepts and rules in order to better deal with novel situations that appear during multi-step action executions. It inferred the categories of novel objects based on previously learned rules, and formed new object categories for these novel objects if their interaction characteristics and appearance did not match the existing categories. Our system further learns probabilistic rules that predict the action effects and the next object states. After learning, the robot was able to build stable towers in the real world, exhibiting some interesting reasoning capabilities such as stacking larger objects before smaller ones and predicting that cups remain insertable even with other objects inside.

(humanoids2015.pdf)
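The two mechanisms in this paragraph can be illustrated with a simple sketch: probabilistic rules estimated by counting how often each effect followed a given context, and a novelty check that assigns an object to the nearest existing category prototype or creates a new category when nothing is close enough. Frequency counting, Euclidean nearest-prototype matching and the distance threshold are stand-in techniques chosen for illustration; the context tuples, feature vectors and labels are hypothetical, and the learner used in humanoids2015.pdf may differ.

```python
import math
from collections import Counter, defaultdict


class ProbabilisticRules:
    """Frequency estimate of P(effect | context) from observed interactions.

    A context is any hashable tuple, e.g. (below_category, above_category, relation);
    all labels are hypothetical.
    """

    def __init__(self):
        self.counts = defaultdict(Counter)

    def observe(self, context, effect):
        """Record that `effect` followed an action executed in `context`."""
        self.counts[context][effect] += 1

    def predict(self, context):
        """Return {effect: probability}, or {} for a context never observed."""
        outcomes = self.counts.get(context)  # .get() does not insert a new key
        if not outcomes:
            return {}
        total = sum(outcomes.values())
        return {effect: n / total for effect, n in outcomes.items()}

    def most_likely(self, context):
        """Return the most probable effect, or None for an unseen context."""
        dist = self.predict(context)
        return max(dist, key=dist.get) if dist else None


def assign_category(features, prototypes, threshold):
    """Match `features` to the nearest prototype or register a new category.

    prototypes: dict of category name -> feature vector; mutated when a new category is created.
    threshold: assumed maximum Euclidean distance for matching an existing category.
    """
    best, best_dist = None, math.inf
    for name, proto in prototypes.items():
        d = math.dist(features, proto)
        if d < best_dist:
            best, best_dist = name, d
    if best is not None and best_dist <= threshold:
        return best
    index = len(prototypes)
    while f"category-{index}" in prototypes:
        index += 1
    new_name = f"category-{index}"
    prototypes[new_name] = list(features)
    return new_name
```

For example, after observing three "inserted" outcomes and one "stacked" outcome for the context `("cup", "box", "smaller")`, `predict` returns probabilities 0.75 and 0.25 for those effects.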

Robot Videos: ICRA & Humanoids


Bootstrapped Learning with Emergent Structuring of Interdependent Affordances

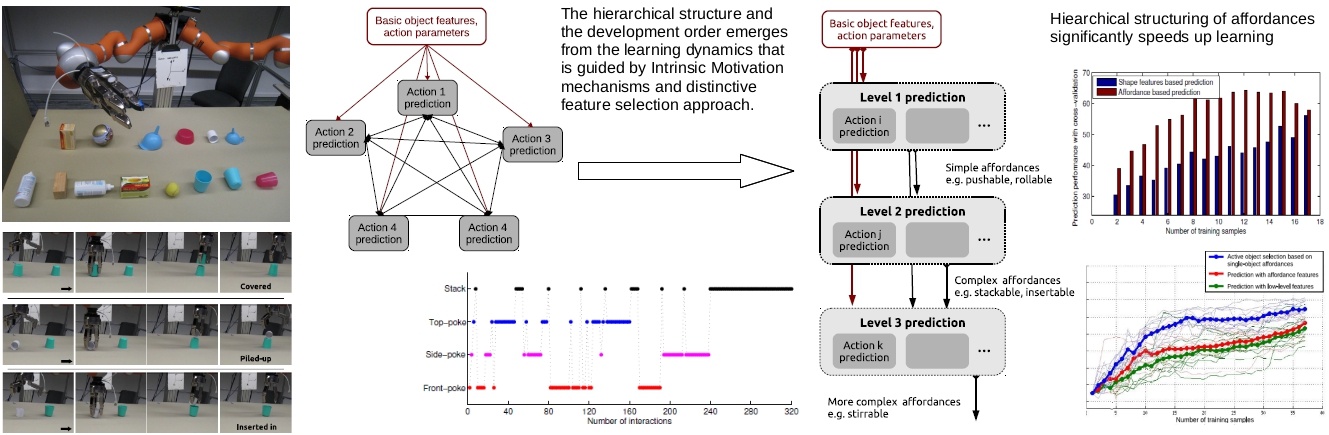

It has been shown that human infants develop a set of action primitives such as grasping, hitting and dropping for single objects by 9 months of age, and later start exploring actions that involve multiple objects. For example, before 18 months they cannot perceive the correspondence between differently shaped blocks and holes, and they fail to insert blocks of different shapes. These data suggest that there is a developmental order in which infants first develop basic skills that are precursors of combinatory manipulation actions. Infants probably also use the learned action-grounded object properties in further learning of complex actions.

Here we propose a learning system for a developmental robot that benefits from bootstrapping, where learned simpler structures (affordances) that encode the robot's interaction dynamics with the world are used in learning complex affordances. We showed that a robot can benefit from hierarchical structuring, where pre-learned basic affordances are used as inputs to bootstrap the learning performance of complex affordances (ICDL2014-Bootstrapping.pdf). A truly developmental system, on the other hand, should be able to self-discover such a structure, i.e. the links from basic to related complex affordances, along with a suitable learning order. To discover the developmental order, we use an Intrinsic Motivation approach that guides the robot to explore the actions it should execute in order to maximize its learning progress. During this learning, the robot also discovers the structure by finding and using the most distinctive object features for predicting affordances. We implemented our method in an online learning setup and tested it on a real dataset that includes 83 objects and a large number of effects created on these objects by three poke actions and one stack action. The results show that the hierarchical structure and the developmental order emerged from learning dynamics guided by Intrinsic Motivation mechanisms and the distinctive feature selection approach (ICDL2014-EmergentStructuring.pdf).
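The learning-progress criterion can be illustrated with a small sketch: for each action, the robot keeps a window of recent prediction errors and selects the action whose error is dropping fastest. The window length, the progress measure (mean error of the older half of the window minus mean error of the newer half) and the action names are assumptions for illustration, not the exact Intrinsic Motivation mechanism of ICDL2014-EmergentStructuring.pdf.

```python
from collections import deque
from statistics import mean


class LearningProgressSelector:
    """Choose the action whose affordance predictor is currently improving fastest."""

    def __init__(self, actions, window=10):
        # Assumed window length; it must be even so it splits into two halves.
        if window < 2 or window % 2:
            raise ValueError("window must be an even number >= 2")
        self.window = window
        self.errors = {a: deque(maxlen=window) for a in actions}

    def record_error(self, action, error):
        """Store the prediction error measured after executing `action`."""
        self.errors[action].append(error)

    def progress(self, action):
        """Drop in mean error from the older half to the newer half of the window."""
        history = list(self.errors[action])
        if len(history) < self.window:
            return float("inf")  # not enough data yet: treat as maximally interesting
        half = self.window // 2
        return mean(history[:half]) - mean(history[half:])

    def select(self):
        """Return the action with the highest learning progress (ties: first listed)."""
        return max(self.errors, key=self.progress)


if __name__ == "__main__":
    selector = LearningProgressSelector(["poke-left", "stack"], window=4)
    for e in [0.9, 0.8, 0.4, 0.3]:
        selector.record_error("poke-left", e)  # errors falling: the predictor is learning
    for e in [0.5, 0.5, 0.5, 0.5]:
        selector.record_error("stack", e)  # errors flat: no progress
    print(selector.select())
```

Preferring progress over raw error steers exploration away from actions that are already mastered (low, flat error) and from actions that are currently unlearnable (high, flat error), which is one way a learning order can emerge.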

Robot Video



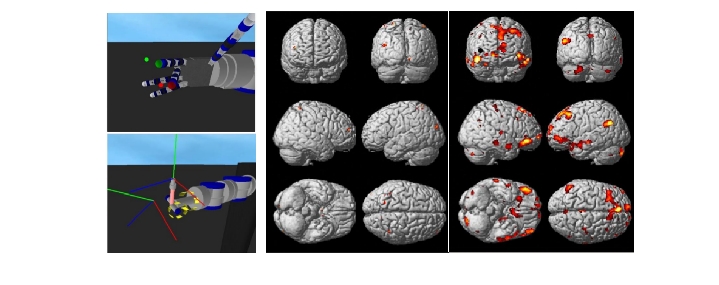

Brain Mechanisms in Anthropomorphic/Non-Anthropomorphic Tool Use

When a trained monkey grasps a tool (a rake allowing the manipulation of objects out of reach), parietal neurons with receptive fields on and around the hand expand their receptive fields to represent the tool.

Since a human operator gains the ability to manipulate objects using a robot, can we extrapolate from this finding that the operator's body schema expands to include the robot in the course of controlling it? If so, what are the properties of this body schema expansion? Does controlling any kind of robot morphology induce similar changes in the brain? To investigate these questions, we designed an fMRI experiment. Our hypothesis is that the anthropomorphicity of the controlled robot is critical to how it is represented in the brain.


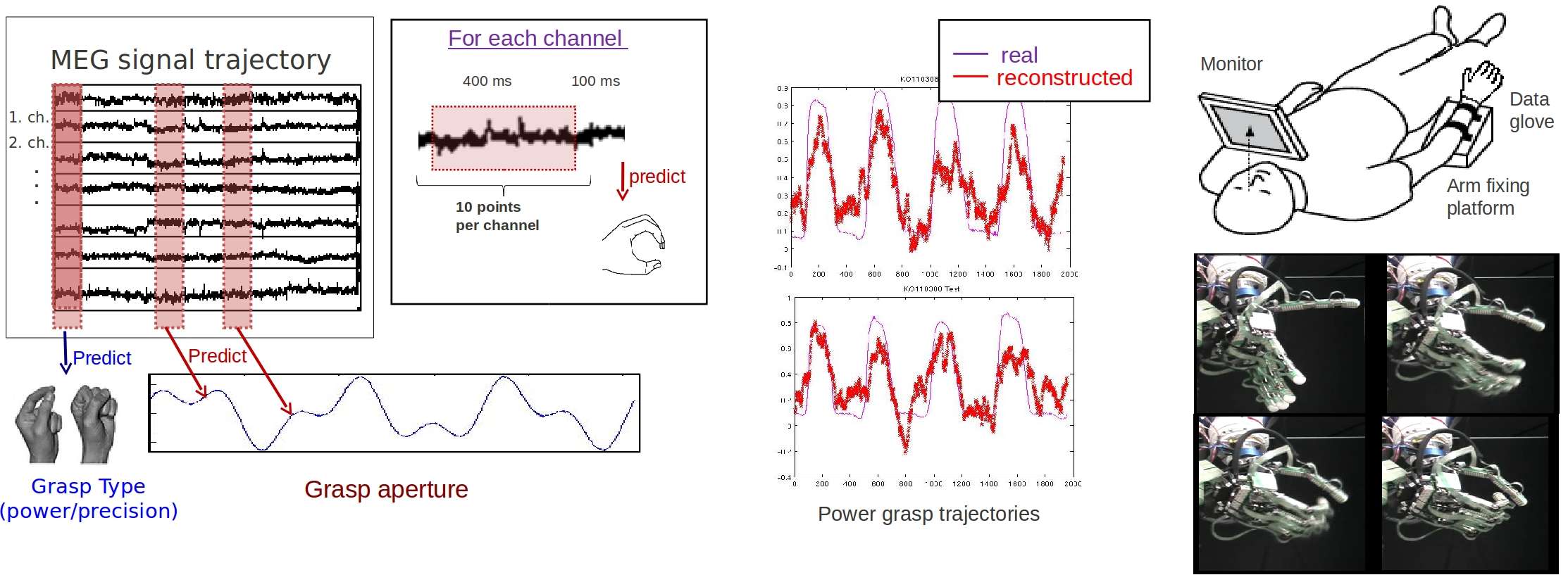

Reconstruction of Grasp Posture from MEG Brain Activity

We aim both to decode the grasp type (power grasp vs. precision pinch) and to reconstruct the aperture size from MEG signals recorded while the subjects performed repetitive finger flexion and extension movements corresponding to either a precision pinch or a power grasp. During the movements, finger joint angles were recorded along with the MEG signals using an MEG-compatible data glove. Support vector machines with a linear kernel are used to learn the mapping between MEG signals and grasp types, and a sparse linear regression method is used to reconstruct the aperture size. (poster)
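The aperture-reconstruction step can be illustrated with one common form of sparse linear regression, the lasso (L1-regularized least squares), fitted by cyclic coordinate descent. This is a generic stand-in: the exact sparse regression method, its regularization strength `lam` and the MEG features used in this project are not specified here, the toy data in the usage example are synthetic, and the linear-kernel SVM decoder for grasp type is not sketched.

```python
def soft_threshold(value, lam):
    """Shrink `value` toward zero by `lam`, returning exactly 0.0 inside [-lam, lam]."""
    if value > lam:
        return value - lam
    if value < -lam:
        return value + lam
    return 0.0


def lasso_coordinate_descent(X, y, lam, n_iter=200):
    """Fit y ~ X.w + b minimizing (1/2n)*||y - Xw - b||^2 + lam*||w||_1.

    X: list of n rows, each a list of p features; y: list of n targets.
    Returns (w, b).
    """
    n, p = len(X), len(X[0])
    # Center features and target so the intercept can be recovered afterwards.
    x_mean = [sum(row[j] for row in X) / n for j in range(p)]
    y_mean = sum(y) / n
    Xc = [[row[j] - x_mean[j] for j in range(p)] for row in X]
    yc = [t - y_mean for t in y]
    z = [sum(row[j] ** 2 for row in Xc) / n for j in range(p)]
    w = [0.0] * p
    residual = yc[:]  # residual = yc - Xc.w, and w starts at zero
    for _ in range(n_iter):
        for j in range(p):
            if z[j] == 0.0:
                continue  # constant feature: carries no information
            # Correlation of feature j with the partial residual that excludes feature j.
            rho = sum(Xc[i][j] * (residual[i] + w[j] * Xc[i][j]) for i in range(n)) / n
            new_wj = soft_threshold(rho, lam) / z[j]
            delta = new_wj - w[j]
            if delta != 0.0:
                for i in range(n):
                    residual[i] -= delta * Xc[i][j]
                w[j] = new_wj
    b = y_mean - sum(w[j] * x_mean[j] for j in range(p))
    return w, b


if __name__ == "__main__":
    # Synthetic data: only the first feature drives the target (y = 2*x0 + 1).
    X = [[1.0, 0.0], [2.0, 1.0], [3.0, 0.0], [4.0, 1.0], [5.0, 0.0], [6.0, 1.0]]
    y = [2 * row[0] + 1 for row in X]
    print(lasso_coordinate_descent(X, y, lam=0.01))
```

On this toy data the weight of the irrelevant second feature stays exactly zero while the first weight ends up close to 2; this exact-zero behavior is what makes L1 penalties useful when only a few input features are informative.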


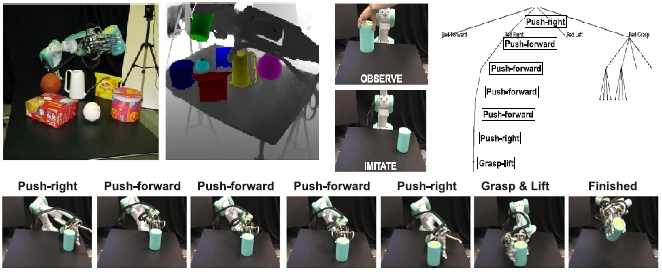

Learning Object Affordances and Planning in Perceptual Space

The objective of this research topic is to enable an anthropomorphic robot to learn object affordances through self-interaction and self-observation, similar to exploring infants aged 7 to 10 months. In the first step of learning, the robot discovers commonalities in its action-effect experiences by discovering effect categories. Once the effect categories are discovered, in the second step, affordance predictors for each behavior are obtained by learning the mapping from the object features to the effect categories.

After learning, the robot can make plans to achieve desired goals, emulate the end states of demonstrated actions, monitor plan execution and take corrective actions, using the perceptual structures employed or discovered during learning.

(videos)
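The two learning steps can be sketched as follows: effect vectors observed after executing one behavior are clustered into effect categories, and an affordance predictor maps object features to those categories. Plain k-means (with deterministic initialization from the first k points) and a nearest-centroid classifier are stand-in methods chosen for brevity; the feature vectors, k and labels are hypothetical, and the original work may use different clustering and classification methods.

```python
import math


def kmeans(points, k, n_iter=50):
    """Plain k-means; centroids start at the first k points (deterministic)."""
    centroids = [list(p) for p in points[:k]]
    labels = [0] * len(points)
    for _ in range(n_iter):
        # Assignment step: each effect vector goes to its nearest centroid.
        labels = [min(range(k), key=lambda c: math.dist(p, centroids[c])) for p in points]
        # Update step: move each centroid to the mean of its members.
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                centroids[c] = [sum(col) / len(members) for col in zip(*members)]
    return labels, centroids


class NearestCentroidPredictor:
    """Affordance predictor: maps object features to an effect category."""

    def fit(self, object_features, effect_labels):
        groups = {}
        for x, lab in zip(object_features, effect_labels):
            groups.setdefault(lab, []).append(x)
        self.centroids = {
            lab: [sum(col) / len(xs) for col in zip(*xs)] for lab, xs in groups.items()
        }
        return self

    def predict(self, x):
        return min(self.centroids, key=lambda lab: math.dist(x, self.centroids[lab]))


if __name__ == "__main__":
    # Hypothetical push behavior: effect = object displacement (dx, dy),
    # object features = (roundness, size).
    effects = [[0.50, 0.0], [0.10, 0.0], [0.52, 0.01], [0.09, 0.0]]
    objects = [[0.9, 0.3], [0.1, 0.3], [0.95, 0.2], [0.05, 0.4]]
    labels, _ = kmeans(effects, k=2)  # step 1: effect categories
    predictor = NearestCentroidPredictor().fit(objects, labels)  # step 2: affordance predictor
    print(labels, predictor.predict([0.85, 0.25]))
```

Because the effect categories are discovered without labels, the predictor's output classes are whatever groupings the clustering found; for planning, each category would additionally need an associated effect prototype (such as its centroid) to predict the next perceptual state.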


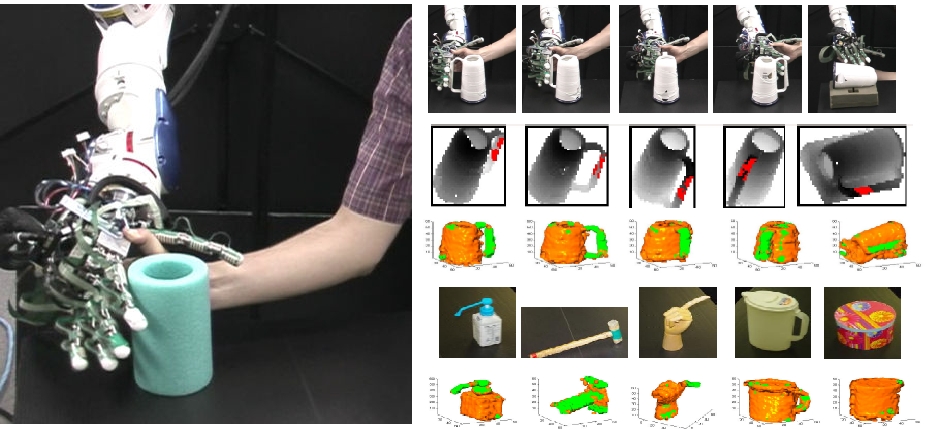

Learning Grasp Affordances through Parental-Scaffolding

Parental scaffolding is an important mechanism that infants use during their development. A robot with the basic ability to reach for an object, close its fingers and lift its hand lacks knowledge of which parts of the object afford grasping and in which hand orientation the object should be grasped. During reach and grasp attempts, the movement of the robot hand is modified by the human caregiver's physical interaction to enable successful grasping. Although the human caregiver does not directly show the graspable regions, the robot should be able to find regions such as the handles of mugs after its action execution has been partially guided by the human.

(videos)


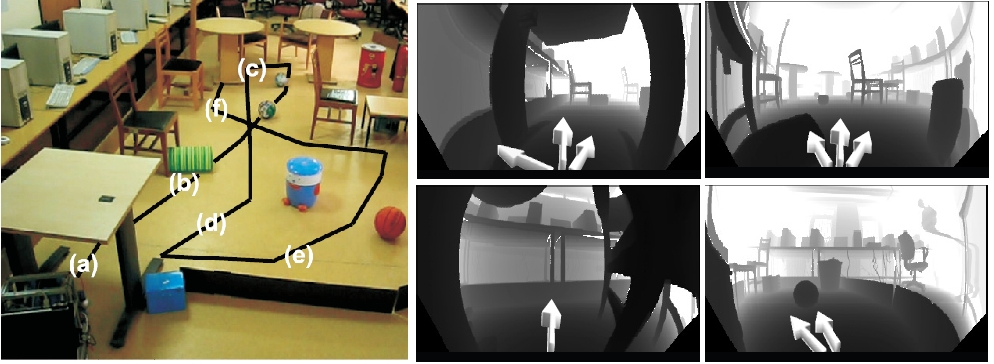

Learning Traversability Affordances

We studied how a mobile robot equipped with a 3D laser scanner can learn to perceive the traversability affordance and use it to wander in a room filled with spheres, cylinders and boxes. The results showed that after learning, the robot can wander around, avoiding contact with non-traversable objects (i.e. boxes, upright cylinders, or lying cylinders in certain orientations) while moving over traversable objects (such as spheres and lying cylinders in a rollable orientation with respect to the robot) and rolling them out of its way.

(videos)
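A minimal sketch of how a learned traversability predictor could drive wandering: a linear classifier is trained on interaction outcomes (traversable or not), and the robot then picks the traversable candidate heading closest to its preferred direction. The perceptron stands in for whatever classifier the original work used, and the two-number feature vectors (standing in for features extracted from the laser scan in each direction), headings and labels are hypothetical.

```python
def train_perceptron(samples, labels, epochs=20, lr=1.0):
    """Train a linear classifier: label +1 = traversable, -1 = non-traversable."""
    w = [0.0] * len(samples[0])
    b = 0.0
    for _ in range(epochs):
        mistakes = 0
        for x, y in zip(samples, labels):
            score = sum(wi * xi for wi, xi in zip(w, x)) + b
            if y * score <= 0:  # misclassified or on the boundary: update
                w = [wi + lr * y * xi for wi, xi in zip(w, x)]
                b += lr * y
                mistakes += 1
        if mistakes == 0:
            break  # every training sample is classified correctly
    return w, b


def is_traversable(w, b, x):
    """Predict whether the object seen with features `x` can be driven over."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b > 0


def choose_heading(w, b, candidates, preferred):
    """Pick the traversable heading (degrees) closest to `preferred`, or None if none is."""
    options = [h for h, feats in candidates.items() if is_traversable(w, b, feats)]
    if not options:
        return None
    return min(options, key=lambda h: abs(h - preferred))


if __name__ == "__main__":
    # Hypothetical features per interaction: (roundness, height); +1 = robot got through.
    samples = [[1.0, 0.2], [0.9, 0.3], [0.1, 0.3], [0.0, 0.5]]
    labels = [1, 1, -1, -1]
    w, b = train_perceptron(samples, labels)
    candidates = {-30: [0.0, 0.5], 0: [0.1, 0.3], 30: [0.95, 0.2]}
    print(choose_heading(w, b, candidates, preferred=0))
```

Here the straight-ahead heading is blocked by an object predicted to be non-traversable, so the robot turns toward the round object it expects to roll out of its way.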
