Emre Ugur

Ph.D.

Active projects

Acronym: INVERSE

Duration: 01.2024 - 01.2028

Funded by: European Union, RIA, HORIZON-CL4-2023-DIGITAL-EMERGING-01-01

Code: 101136067

Budget: 745,000 Euro

The INVERSE project aims to provide robots with essential cognitive abilities by adopting a continual learning approach. After an initial bootstrap phase, used to create initial knowledge from human-level specifications, the robot refines its repertoire by capitalising on its own experience and on human feedback. This experience-driven strategy makes it possible to frame different problems, such as performing a task in a different domain, as problems of fault detection and recovery. Humans have a central role in INVERSE, since their supervision helps limit the complexity of the refinement loop, making the solution suitable for deployment in production scenarios. The effectiveness of the developed solutions will be demonstrated in two complementary use cases designed to be realistic instantiations of actual work environments.

Acronym: DEEPPLAN

Duration: 01.2025 - 02.2027

Funded by: Scientific and Technological Research Council of Turkey (TUBITAK)

Role: Principal Investigator

Code: 124E227

Bogazici Budget: 1,650,000 TL

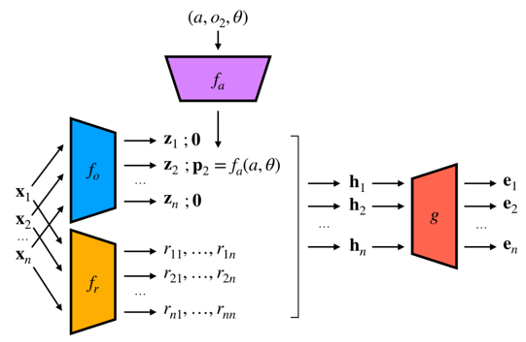

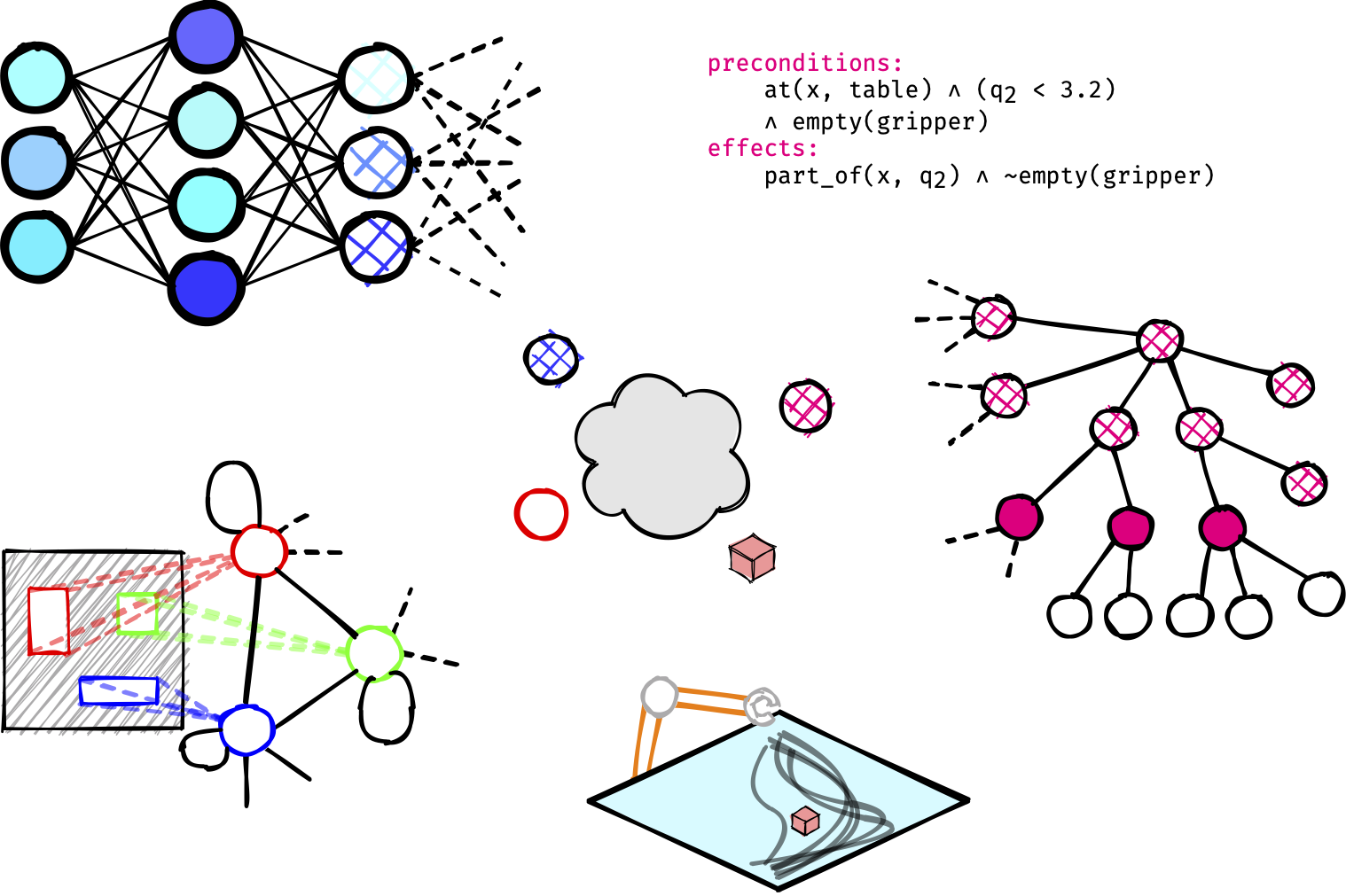

In this project, we develop mechanisms that enable robots to discover high-level, low-dimensional, discrete object and action primitives together with their associated operators, and to learn low-level, high-dimensional, continuous predictive models that are used in both high-level and low-level planning and verification. To the best of our knowledge, the simultaneous discovery of object, effect, and action categories, the learning of their associated operators, and the use of these operators for planning have not yet been studied in the literature. We propose several deep neural network architectures for the discovery of action primitives. First, we suggest feeding the sensorimotor trajectory into a conditional neural process network and extracting the discrete category of the motor component through a discrete bottleneck layer. Alternatively, using the same network structure, we propose to create both continuous and discrete latent representations and to use the discrete representation to filter the continuous data, thus allowing categories to emerge in the discrete representation. Finally, we propose a structure that obtains both object and action categories using an attention architecture and discrete activation layers.
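The discrete bottleneck idea can be illustrated with a toy forward pass. This is only a minimal sketch under strong assumptions, not the project's architecture: the encoder here is a hand-set linear map over a mean-pooled trajectory rather than a conditional neural process, and the names `encode_trajectory`, `discrete_bottleneck`, and the example weights are hypothetical. Training such a layer would additionally need a gradient estimator (for example a straight-through or Gumbel-softmax scheme), which is omitted.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def encode_trajectory(trajectory, weights):
    """Mean-pool a trajectory (list of feature vectors) and project it to
    one logit per category. `weights` has one row per category (assumed)."""
    dim = len(trajectory[0])
    pooled = [sum(step[i] for step in trajectory) / len(trajectory)
              for i in range(dim)]
    return [sum(w * x for w, x in zip(row, pooled)) for row in weights]


def discrete_bottleneck(logits):
    """Collapse continuous logits into a single discrete category.
    Returns the category index, its one-hot code, and the soft probabilities."""
    probs = softmax(logits)
    k = max(range(len(probs)), key=probs.__getitem__)
    one_hot = [1.0 if i == k else 0.0 for i in range(len(probs))]
    return k, one_hot, probs


# Toy 2-D "sensorimotor" trajectories; the feature meanings are hypothetical.
weights = [[2.0, 0.0], [0.0, 2.0]]          # hand-set, not learned
push_like = [[1.0, 0.0], [0.8, 0.1]]
lift_like = [[0.1, 0.9], [0.0, 1.0]]

push_category, push_code, _ = discrete_bottleneck(encode_trajectory(push_like, weights))
lift_category, lift_code, _ = discrete_bottleneck(encode_trajectory(lift_like, weights))
```

Only the one-hot code passes through the bottleneck, so downstream components see a symbol-like category rather than the continuous trajectory features.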

Completed projects

Acronym: DEEPSYM

Duration: 02.2021 - 02.2024

Funded by: Scientific and Technological Research Council of Turkey (TUBITAK)

Role: Principal Investigator

Code: 120E274

Project Web Page

Bogazici Budget: 720,000 TL

Abstraction and abstract reasoning are among the most essential characteristics of high-level intelligence that distinguish humans from other animals. High-level cognitive skills can only be achieved through abstract concepts, symbols representing these concepts, and rules that express relationships between symbols. This project aims to enable the robot to discover, on its own, the abstract concepts, symbols, and rules that allow complex reasoning. If robots can achieve such abstract reasoning on their own, they can perform new tasks in completely novel environments by updating their cognitive skills or by discovering new symbols and rules. If the objectives of this project are achieved, scientific foundations will be laid for robotic systems that learn symbols and rules life-long through self-driven interaction with the environment and that express various sensory-motor and cognitive tasks in a single framework.

Title: Robots Understanding Their Actions by Imagining Their Effects

Acronym: IMAGINE

Duration: 01.2017 - 02.2022

Funded by: European Union, H2020-ICT

Code: 731761

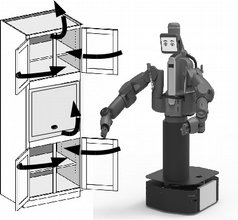

Budget: 365,000 Euro

Today's robots are good at executing programmed motions, but they do not understand their actions in the sense that they could automatically generalize them to novel situations or recover from failures. IMAGINE seeks to enable robots to understand the structure of their environment and how it is affected by their actions. The core functional element is a generative model based on an association engine and a physics simulator. "Understanding" is given by the robot's ability to predict the effects of its actions, before and during their execution. This scientific objective is pursued in the context of recycling of electromechanical appliances. Current recycling practices do not automate disassembly, which exposes humans to hazardous materials, encourages illegal disposal, and creates significant threats to the environment and health, often in third countries. IMAGINE will develop a TRL-5 prototype that can autonomously disassemble prototypical classes of devices, generate and execute disassembly actions for unseen instances of similar devices, and recover from certain failures.

Duration: 02.2020 - 01.2023

Funded by: Scientific and Technological Research Council of Turkey (TUBITAK)

Role: Principal Investigator

Code: 118E923

Bogazici Budget: 140,000 Euro

In this project, a novel wearable exoskeleton with flexible clothing will be developed for paraplegic persons who have lost their lower extremity motor functions due to low back pain, paralysis, and similar disturbances. The prototype will be designed as a rigid-link exoskeleton actuated via series-elastic actuators. The user's physical state will be observed using soft elements attached to wearable sensorized clothing, which will be used in conjunction with the exoskeleton. Trajectory planning will be explored as a human-to-robot skill transfer problem in order to minimize the metabolic cost. Stability and balance analyses will be performed, and environmental factors will be estimated to provide safe walking support to the human using a hierarchical control structure.

Duration: 11.2019 - 10.2020

Funded by: International Joint Research Promotion Program, Osaka University

Role: Principal Investigator

Code:

Bogazici Budget: 10,000 Euro

In this project, we study the novel hypothesis that action understanding can develop bottom-up with an 'effect'-based representation of actions, without requiring any coordinate transformation. We call the computational model to be developed the 'Effect Based Action Understanding Model'. We adopt a computational brain modeling approach and validate our findings on embodied systems (i.e. robots). We argue that, once it has developed bottom-up, the action understanding skill can facilitate the development of the perspective transformation skill (as opposed to the general belief). In the project, the hypothesis will be tested on robots, and the models will be implemented in a biologically relevant way. In this way, our model will be able to address the so-called mirror neurons, which are believed to represent the actions of others and of the self in a multimodal way.

Title: Using multimodal data from immersive Virtual Reality (VR) environments for investigating perception and self-regulated learning

Duration: 10.2021 - 11.2022

Funded by: Bogazici University Research Fund

Role: Researcher

Code: 18321 / 21DR1

Bogazici Budget: 200,000 TL

With recent breakthroughs in Virtual Reality technology, VR has the power to redefine the way we interact with digital media. VR provides an environment for the curious to explore the limits of body perception and its effects on interactivity in Human-Computer Interaction, and to extend the form and nature of learning experiences. Besides contributing to basic science, the insights we gain from VR research can also be applied in numerous fields, including education and robotics. In fact, it is this perspective and multidisciplinary approach that brought us, researchers from multiple disciplines, together. The proposal consists of multiple studies. Our general aims in this project are: (1) to set up a multidisciplinary Virtual Reality Laboratory at Boğaziçi University; (2) to conduct basic research on visuo-motor coordination, avatar representation, body ownership and body-schema in order to have a better understanding of the brain in action; and (3) to investigate the ways in which multimodal data can be used for studying self-regulated learning and to explore new ways in which we can apply VR technologies in the field of education in order to improve preservice teachers' (and students') learning.

Title: Design of Cognitive Mirroring Systems Based on Predictive Coding

Duration: 01.2019 - 03.2020

Funded by: Japanese Science and Technology Agency (JST)

Code:

Bogazici Budget: 45,000 Euro

Acronym: SEGMENT

Duration: 06.2020 - 05.2021

Funded by: Bogazici University Research Fund

Budget: 54,000 TL (7,200 Euro)

Code: 16913

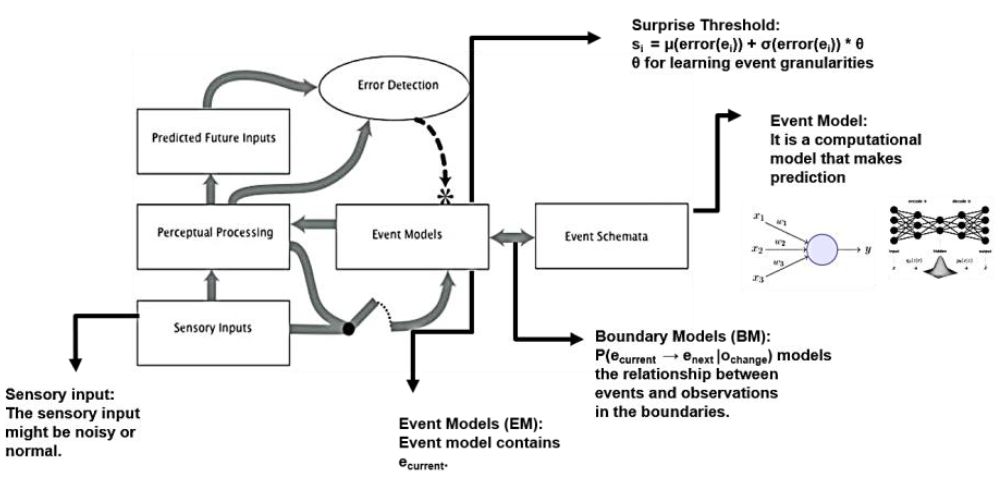

"Event" is a fuzzy term that refers to a closed spatio-temporal unit. The aim of the project is to develop a computational model that can learn event models, use the learned event models to segment ongoing activities at varying granularities, and be compared with human subjects in terms of performance. Through several experiments with this model, we aim to clarify the effect of the reliability of sensory information and of expectation on event segmentation performance, and to obtain a model that is capable of learning, segmenting, and representing new events while being robust to noise. In addition to comparing human event segmentation performance with that of the computational model, we plan to design a new validation method that increases the reliability of the model's assumptions, both for validating the psychological theory and for assessing how well the model captures human event representations. The results of our experiments and our computational model will be used to validate predictions of a psychological theory, namely Event Segmentation Theory, and to develop robotics models that are capable of simulating higher-level cognitive processes such as action segmentation at different granularities and the formation of concepts representing events.
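As a rough illustration of segmentation driven by an event model, the sketch below assumes a common reading of Event Segmentation Theory in which a boundary is placed when the prediction error of the current event model rises. Everything here is a simplifying assumption rather than the project's model: the "event model" is just a running mean of a 1-D signal, and `segment_events` and `threshold` are hypothetical names.

```python
def segment_events(signal, threshold):
    """Return the indices at which a new event starts.

    The current event is modeled by the running mean of its samples.
    When a sample deviates from that prediction by more than `threshold`,
    a boundary is placed and a fresh event model is started.
    """
    boundaries = [0]
    mean, count = signal[0], 1
    for t in range(1, len(signal)):
        error = abs(signal[t] - mean)       # prediction error of the event model
        if error > threshold:
            boundaries.append(t)            # error spike: start a new event
            mean, count = signal[t], 1
        else:
            count += 1
            mean += (signal[t] - mean) / count  # incremental mean update
    return boundaries


# Toy observation stream with three plateaus (values are made up).
signal = [0.0, 0.1, 0.0, 5.0, 5.1, 4.9, 0.0, 0.1]
fine = segment_events(signal, threshold=1.0)     # [0, 3, 6]
coarse = segment_events(signal, threshold=10.0)  # [0]
```

In this toy setting the threshold plays the role of granularity: a lower threshold yields finer segments, a higher one merges them into coarser events.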

Acronym: IMAGINE-COG++

Duration: 06.2018 - 06.2019

Funded by: Bogazici University Research Fund

Budget: 36,000 TL

Code: 18A01P5

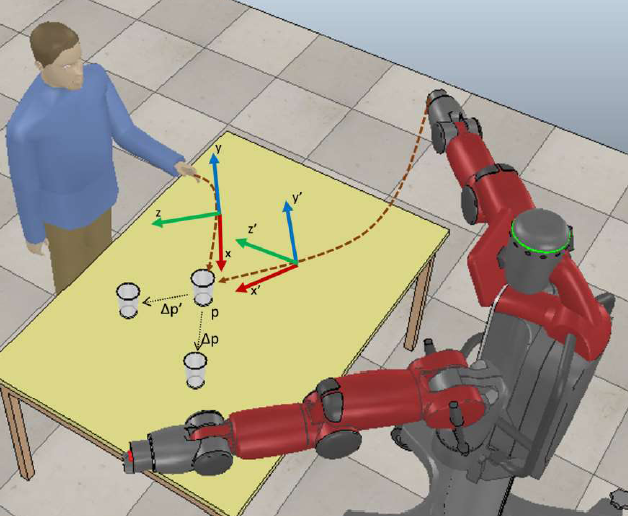

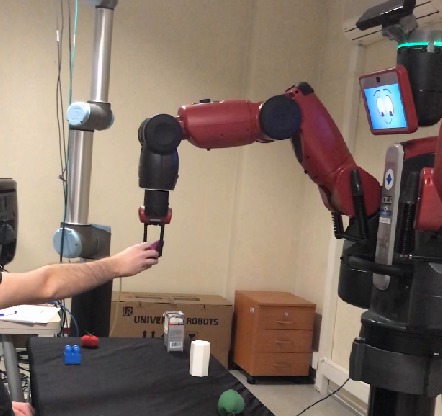

In this research project, we aim to design and implement an effective robotic system that can infer others' goals from their incomplete action executions and can help others achieve these goals. Our approach is inspired by the helping behavior observed in infants; it exploits the robot's own sensorimotor control and affordance detection mechanisms to understand demonstrators' actions and goals; and it has similarities with human brain processing related to the control and understanding of motor programs. Our system will follow a developmental progression similar to that of infants, whose performance in inferring the goals of others' actions is closely linked to the development of their own sensorimotor skills. At the end of this project, we plan to verify whether our developmental goal inference and helping strategy is effective through human-robot interface experiments using the upper-body Baxter robot in different tasks.

Duration: 14.03.2017 - 01.02.2019

Funded by: TUBITAK 2232, Return Fellowship Award

Code: 117C016

Budget: 108,000 TL

In this project, we aim to build an advanced manipulation skill system supported by affordances and sensor feedback, by learning and modeling the affordances that the environment offers to the robot. Since actions such as 'grasp', 'carry', and 'place' are typical in such environments, we plan to transfer the movements to the robot through learning by demonstration. After learning the manipulation skills required for semi-structured environments in this way, the robot should learn how the visual and other affordances offered by the environment affect the execution of these skills.

Duration: 08.2016 - 08.2017

Funded by: Bogazici University Research Fund

Budget: 55,000 Euro

The aim of this project is to start forming a new cognitive and developmental robotics research group at Bogazici University with a special emphasis on intelligent and adaptive manipulation. This start-up fund will be used to equip the laboratory with the most important and necessary setup, which includes a human-friendly robotic system for manipulation (Baxter robot), a number of sensors for perception, and a workstation for computation and control.

Open student projects for CMPE 492

- The topics are not limited to the ones below. You are free to suggest your own project description with the state-of-the-art robots (Baxter, Sawyer, NAO) in our lab!

- SERVE: See-Listen-Plan-Act

In this project, you will integrate state-of-the-art DNN-based object detection and classification systems for perception, existing libraries for speech recognition, a grounded conceptual knowledge base for language interpretation, a planner for reasoning, and robot actuators for achieving the given goals. Initially, we plan to investigate the use of the YOLO real-time object detection system, PRAXICON for translating natural language instructions into the robot knowledge base, the PRADA engine for probabilistic planning, and CaffeNet deep convolutional neural networks fine-tuned for robotic table-top settings.

- NAO Robot Avatar:

In this project, you will implement a system that enables seeing through NAO's eyes and moving with NAO's body. The operator's motions will be copied to NAO using an adapted whole-body tracking system, and the robot's camera images will be displayed on a head-mounted display. This system will enable full embodiment and will be used for a very fruitful research direction: utilizing robot avatars to understand the underlying mechanisms of human sensorimotor processes by changing different aspects of the embodiment.

- Graphical User Interface for Baxter Robot:

The aim of this project is to implement a GUI to control the Baxter robot. Through the user interface, it should be possible to move the joints separately, move the hand to a specific position, open and close the grippers, and display sensor data such as force/torque, camera, and depth readings.

- HRI: Robot control and communication through speech:

The aim of this project is to integrate existing speech processing and synthesis tools to communicate with the Baxter robot. English and Turkish will be used for communication, for setting tasks, and for getting information from the robot. The robot's voice-based communication skills will be reinforced with various interfaces, including emotional faces displayed on the tablet.

- Robot simulation and motion control for Sawyer: Operating a real robot can be cumbersome, risky and slow. Therefore, it is often helpful to be able to simulate the robot. Moreover, if a robot needs to move its hand to a desired target, it should not simply follow an arbitrary path from its current position, because it may hit an obstacle. Therefore, the robot needs to plan a path from its current pose to the target pose. The objective of this project is to create a realistic kinematic, volumetric and dynamic model of the Sawyer robot platform, to adapt a number of motion planning packages for Sawyer, and finally to implement a benchmark task such as a pick-and-place operation across an obstacle.
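To make the path planning requirement concrete, here is a minimal sketch of collision-free planning on a 2-D occupancy grid using breadth-first search. It is a deliberately simplified stand-in, not one of the motion planning packages mentioned above: a real Sawyer planner works in joint space with a full robot model, and the grid, `plan_path`, and the example coordinates are all hypothetical.

```python
from collections import deque


def plan_path(grid, start, goal):
    """Breadth-first search on a 4-connected occupancy grid.

    grid[r][c] == 1 marks an obstacle cell, 0 a free cell.
    Returns the shortest list of (row, col) cells from start to goal,
    or None if the goal cannot be reached.
    """
    rows, cols = len(grid), len(grid[0])
    parents = {start: None}              # also serves as the visited set
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            path = []
            while cell is not None:      # walk back through the parents
                path.append(cell)
                cell = parents[cell]
            return path[::-1]
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            inside = 0 <= nr < rows and 0 <= nc < cols
            if inside and grid[nr][nc] == 0 and (nr, nc) not in parents:
                parents[(nr, nc)] = cell
                queue.append((nr, nc))
    return None


# A wall (1s) separates the pick cell on the left from the place cell on the right.
grid = [
    [0, 0, 0, 0, 0],
    [0, 0, 1, 0, 0],
    [0, 0, 1, 0, 0],
]
path = plan_path(grid, start=(2, 0), goal=(2, 4))
```

The straight line between the two cells is blocked, so the returned path detours over the top of the wall, which is the same behaviour expected from the pick-and-place benchmark across an obstacle.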